Key Takeaways:

- Implement robust data scraping protection measures to prevent malicious activity, such as unauthorised price scraping and the extraction of sensitive content from websites.

- Regularly analyse bot traffic to identify unusual patterns, which can indicate scraping attempts, and take action to block or limit access from suspicious sources.

- Use a reverse proxy to mask your origin server’s IP address and plant invisible (honeypot) links to catch scrapers, making it harder for them to access and extract valuable data.

Data is often referred to as the “new oil” in our ever-digitising world and, as such, is one of the most valuable assets an organisation can possess.

The collection and analysis of data can reveal important insights, enhance strategic decision-making, and keep businesses competitive. Where there’s valuable data, there will always be someone who wants to get it, and sometimes that means getting it through unauthorised means.

Data scraping, also referred to as web scraping or screen scraping, is the automated collection of data from websites or applications.

Although some types of data scraping are legitimate, such as search engines indexing a website, malicious or unauthorised scraping can infringe a website’s intellectual property, consume extensive bandwidth, and eventually damage business models or competitive advantage.

In this comprehensive guide, we’ll discuss exactly what data scraping is, why it is essential to guard against unauthorised scraping, and the strongest tools and practices you can put to work safeguarding websites from it.

Protect Your Brand & Recover Revenue With Bytescare's Brand Protection software

What is Data Scraping?

Data scraping refers to extracting information with a bot or other automated script that can quickly pull large quantities of data from a website or online service: product lists, prices, user reviews, and much more.

While it can be a perfectly valid activity when used for competitive analysis, market research, or data aggregation, scraping is often abused to pilfer proprietary content or siphon off sensitive personal information.

Web scraping works because websites usually expose their data in a very accessible form through HTML. Scrapers can mine huge amounts of data efficiently with scripts that read through page structures without human intervention.

Legitimate vs. Malicious Uses

Data scraping can be perfectly legitimate. Some examples are:

Search indexing: Public web pages are indexed by automatic crawlers employed by Google, Bing, and other search engines.

Price comparison sites: Services that track the price of a product across numerous e-commerce sites to show customers where to get it at the best price.

Research and analytics: Analysts, journalists, and academics collect large datasets to identify trends or support studies.

However, data scraping also opens avenues to malicious activities, including:

Intellectual property theft: Competitors can scrape and republish copyrighted material.

Data harvesting for spam: Attackers collect large, targeted lists of email addresses or personal information, which are then sold or used in phishing campaigns.

Content duplication: Rogue sites may duplicate content to artificially attract web traffic, at the cost of the original publisher’s search engine ranking.

What is the Difference Between Data Scraping and Data Crawling?

| Criteria | Data Crawling | Data Scraping |
|---|---|---|
| Primary Purpose | Systematically discovering and indexing web pages or documents | Extracting specific pieces of information (e.g., text, images, metadata) from web pages |
| Focus | Identifying new or updated content by following links (like a web spider) | Gathering structured/targeted data for analysis, storage, or reuse |
| Target | A broader range of websites and content, often following links recursively | Specific websites and data points defined by the user |
| Method | Sequentially visits links across websites to build an index or archive | Parses the HTML (or other structures like JSON/XML) on a page to locate and retrieve relevant data |
| Data Extraction | Indexes and stores discovered URLs and content, often without specifically extracting data points | Extracts data based on pre-defined patterns and targets specific information |
| Structure | Structured based on indexing principles, enabling efficient search and retrieval | Often unstructured and requires cleaning and formatting for analysis |
| Scope of Operation | Broad and site-wide, capturing as many pages or documents as possible | Narrow and targeted, focusing on specific data points or sections of a webpage |
| Usage | Search engine indexing, market analysis, trend identification | Market research, price comparison, competitive analysis, lead generation (can be malicious or beneficial) |
| Output | A list or database of URLs, page metadata, or basic snapshots | Structured data (e.g., CSV, JSON) containing extracted fields such as product prices, contact info, or article text |
| Frequency | Regular or scheduled crawling to keep content indices fresh | On-demand or scheduled to extract certain data as needed |
| Ethical/Legal Concerns | Primarily revolves around obeying robots.txt and not overwhelming servers | Ensures compliance with site terms of service, copyright laws, and user privacy regulations when collecting and using scraped data |

Essentially, crawling is about discovering and indexing, while scraping is about targeted extraction.

Crawlers can be used as a component within a scraping system, where the crawler discovers relevant URLs and the scraper extracts the specific data from those pages.


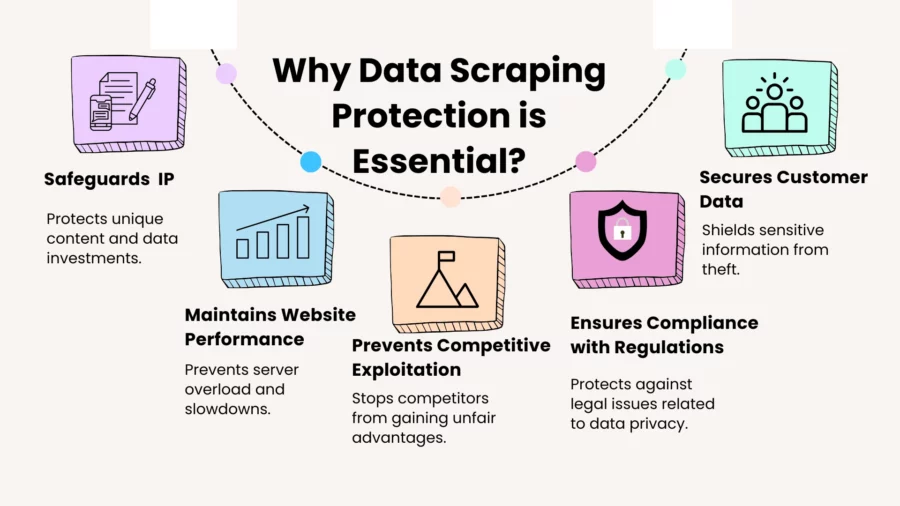

Why Is Data Scraping Protection Essential?

Protection of Intellectual Property

Most websites have spent valuable time and money generating unique content: text, images, product information, and other value-added data.

The value of that original data is compromised when it is scraped without permission and used elsewhere.

Maintaining Website Performance

Data scraping burdens web servers with many repeated requests in a short period, which can slow a site down or even bring a server to its knees if the system cannot handle such high volumes of traffic.

Over time, this can degrade the user experience for legitimate visitors.

Preventing Competitive Exploitation

Very often, competitors use scrapers to gather intelligence on your prices, inventory, or marketing strategy.

Left unchecked, scrapers can give your competitors an unfair advantage by letting them copy or undercut your strategy.

Compliance with Data Protection Regulations

With privacy and data protection under growing scrutiny, it is vital that unauthorised scraping does not expose personal information or put you in breach of legislation such as the GDPR or CCPA. Either outcome can carry serious legal consequences.

Securing Customer Data

Scraping can also target e-commerce sites and other services that hold sensitive customer information, putting personal data at risk of theft: names, addresses, email addresses, even credit card details.

Techniques of Data Scraping

Before going into the protection strategies, let’s take a look at some common techniques that scrapers use to extract data from websites:

HTML Parsing

This is the simplest technique: the scraper downloads the webpage and extracts data directly from the structure of the HTML.

It generally depends on some specific tags or patterns in the HTML markup that contain titles, images, and links from which the useful data can be extracted.
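To make the idea concrete, here is a minimal sketch of HTML parsing using only Python’s standard library. The markup in `SAMPLE_HTML` and the class name `LinkAndTitleParser` are made up for illustration; a real scraper would first download the page body.

```python
from html.parser import HTMLParser


class LinkAndTitleParser(HTMLParser):
    """Collects the page title and every href found in <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears inside <title> is kept.
        if self._in_title:
            self.title += data


# Hypothetical markup standing in for a downloaded response body.
SAMPLE_HTML = """
<html><head><title>Example Shop</title></head>
<body><a href="/products/1">Widget</a> <a href="/products/2">Gadget</a></body></html>
"""

parser = LinkAndTitleParser()
parser.feed(SAMPLE_HTML)
print(parser.title, parser.links)
```

Because the extraction depends on fixed tags and patterns, it is also the easiest technique to disrupt: content that is not present in the raw HTML never reaches a parser like this one.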

DOM Parsing

DOM parsing extracts data by navigating the page’s Document Object Model (DOM), including elements rendered by JavaScript.

Scrapers can interact with the webpage just like a browser would, executing JavaScript and interpreting the dynamic content that gets rendered.

API Scraping

Other scrapers take advantage of open or unsecured APIs to extract data more directly. APIs are built for data sharing, but they are often abused by scrapers, especially when they have weak authentication or rate-limiting protections.

Headless browsers

Headless browsers, driven by automation tools such as Puppeteer or Selenium, can load a webpage completely for a scraper, including dynamic content rendered through JavaScript, without showing a user interface.

This enables more sophisticated scraping, which emulates human behavior and slips past simple protection mechanisms.

Data Harvesting with Proxies

Scrapers do not want to be detected, so they mask their actual IP addresses through proxy networks. By cycling through thousands of proxies, they can distribute their requests and avoid IP blocking, which makes it very difficult for website owners to trace where a scraper is located.


Challenges in Protecting Websites from Scraping

There is no one-size-fits-all approach to scraping protection. Several factors make the job difficult for website owners, developers, and security professionals.

Automated Detection

Scraping tools can simulate human behavior, browsing the website just as a legitimate user would. This makes it hard for a security system to tell human visitors apart from bots, especially with headless browsers, which behave much like a real user’s browser.

Rate Limiting Evasion

Most websites limit the number of requests one user can send to the server over a given time.

To beat this, scrapers spread their requests across different IP addresses (rotating proxies) and/or add delays between requests to stay under the rate limits.

JavaScript Rendering and Dynamic Content

Much of the content on modern websites loads dynamically with JavaScript, meaning that a scraping tool has to be able to run JavaScript to get the content.

This adds a new dimension to detection and prevention, because sophisticated scraping tools that can interact with dynamic content look much more like real browsers.

Legal and Ethical Considerations

While there are plenty of valid reasons to protect against data scraping, companies also face ethical and legal issues in how they go about protecting themselves.

For example, blocking legitimate users through overzealous security, or breaking privacy laws while collecting data about visitors, can carry heavy legal consequences.

Techniques for Data Scraping Protection

IP Rate Limiting and Blocking

The simplest way to reduce scraping attempts is to limit the number of requests from one IP within a short period of time.

Rate limits stop scrapers from overwhelming a website’s server and help surface traffic patterns that look abnormal.

If an IP exceeds some threshold, it may get temporarily or permanently blocked. However, this can be circumvented if scrapers utilise proxy networks, so it’s generally used in conjunction with other methods.
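The per-IP threshold described above can be sketched as a sliding-window counter. This is an in-memory illustration only: the class name, the limit of 3 requests per 10 seconds, and the example IP address are assumptions, and a production setup would normally enforce limits at a proxy, WAF, or shared store rather than in application memory.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allows at most max_requests per IP within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip, now=None):
        # `now` can be injected for testing; otherwise use a monotonic clock.
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the threshold: block or challenge this request
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)
decisions = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3)]
print(decisions)
```

The fourth request inside the window is rejected, while a request arriving after the oldest timestamp has expired is accepted again.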

CAPTCHAs and CAPTCHA Alternatives

CAPTCHAs are used very often to make sure that the users are humans and not bots.

If a user performs a suspicious action, or tries to submit a form or reach a protected part of the website, they are asked to solve a CAPTCHA challenge.

There exist several forms of CAPTCHAs:

- Image CAPTCHAs: Where users identify objects in images.

- Text-based CAPTCHAs: The user has to type in distorted characters.

- Invisible CAPTCHAs: These monitor the interaction for patterns that seem suspicious or bot-like, and show a CAPTCHA only when needed.

CAPTCHAs can be very effective but can degrade the user experience, mainly for legitimate users. Alternatives like honeypots or JavaScript challenges (where a small JavaScript challenge runs behind the scenes to check for bots) can be used to mitigate this issue.
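As a rough illustration of the honeypot idea, a form can include an extra field that is hidden from human visitors with CSS. People never fill it in, while naive bots that populate every input do. The field name `website_url` and the helper below are hypothetical.

```python
# Hypothetical field name; the input is hidden from humans via CSS.
HONEYPOT_FIELD = "website_url"


def is_probable_bot(form_data):
    """Return True when the hidden honeypot field was filled in."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())


human_submission = {"email": "jane@example.com", "website_url": ""}
bot_submission = {"email": "x@example.com", "website_url": "http://spam.example"}
print(is_probable_bot(human_submission), is_probable_bot(bot_submission))
```

A check like this costs legitimate users nothing, which is why it is often tried before falling back to a visible CAPTCHA. It will not stop scrapers that render the page and skip hidden fields.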

Bot Detection and Fingerprinting

Advanced bot-detection services can distinguish a human visitor from a scraper through behavioral analysis.

They apply machine learning models to device fingerprints, session data, and browsing patterns to identify suspect traffic.

Some of the common approaches are:

- Behavioral analysis: Looks for patterns such as mouse movements, clicks, and scrolling that are typical of humans but not of bots.

- Device fingerprinting: Examines the browser, operating system, screen resolution, and other data to identify whether the traffic is coming from a known bot or a real user.

Fingerprinting can identify advanced bots, even those using proxies or emulating human interactions.
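A heavily simplified sketch of device fingerprinting is shown below. It hashes a handful of request attributes into a stable identifier that does not depend on the IP address, and applies a crude user-agent check. The choice of headers, the marker list, and both function names are assumptions; commercial services combine far more signals.

```python
import hashlib

# Assumed set of headers; headers are passed as a plain dict with these exact keys.
FINGERPRINT_HEADERS = ("User-Agent", "Accept-Language", "Accept-Encoding")


def fingerprint(headers, screen_resolution=""):
    """Hash selected client attributes into a stable, IP-independent identifier."""
    parts = [headers.get(name, "") for name in FINGERPRINT_HEADERS]
    parts.append(screen_resolution)
    return hashlib.sha256("|".join(parts).encode("utf-8")).hexdigest()


def looks_automated(headers):
    """Crude heuristic: missing user agent or a well-known automation marker."""
    user_agent = headers.get("User-Agent", "").lower()
    known_markers = ("headlesschrome", "python-requests", "curl")
    return not user_agent or any(marker in user_agent for marker in known_markers)


browser = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept-Language": "en-GB",
    "Accept-Encoding": "gzip",
}
print(fingerprint(browser, "1920x1080")[:16], looks_automated(browser))
```

Because the identifier is derived from the client rather than the network address, the same scraper rotating through proxies still produces the same fingerprint unless it also varies these attributes.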

Content Obfuscation

Content obfuscation is another common strategy for deterring data scrapers.

For example, useful data such as prices, email addresses, and phone numbers can be encrypted or encoded into a form that scrapers have to decode before they can read it.

Another approach is lazy loading, where content is loaded only when required; this makes it harder for scrapers to gather large volumes of data in one pass.
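One very light form of encoding is to emit sensitive strings as numeric HTML character references. Browsers render them normally, but a scraper matching the raw markup for something like an email pattern will miss them. The helper name `obfuscate` is an assumption, and this is a deterrent only: any scraper that decodes entities reads the value straight away.

```python
import html


def obfuscate(text):
    """Encode every character as a numeric HTML character reference."""
    return "".join(f"&#{ord(ch)};" for ch in text)


encoded = obfuscate("sales@example.com")
print(encoded)
# A browser (or html.unescape) turns the references back into the original text.
print(html.unescape(encoded))
```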

Advanced Web Application Firewalls (WAF)

A WAF acts like a shield between the server of a website and incoming traffic.

A modern WAF is able to detect malicious patterns of traffic and block scraping bots by looking for known signatures and behaviors in traffic.

WAFs can also utilise machine learning to evolve with new techniques being used for scraping over time.

Monitoring and Logging

It is also very important to regularly check the site traffic and server logs for suspicious activities.

Logs can reveal patterns such as high request rates from a single IP address, non-human browsing behavior, or a high rate of 404 errors, all of which commonly point to a scraping attempt.

It is possible to set up alerts and automated responses that can help mitigate scraping attacks in real-time, which may include temporary blocking of an IP, initiating CAPTCHA challenges, or even launching more advanced bot-detection protocols.
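A small log-analysis sketch along these lines is shown below. It assumes access logs in the common log format; the sample lines, the thresholds, and the function names are illustrative rather than recommended values.

```python
import re
from collections import Counter

# Matches the client IP and status code of a common-log-format line.
LOG_PATTERN = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" (?P<status>\d{3}) ')


def summarise(lines):
    """Count total requests and 404 responses per client IP."""
    requests, not_found = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that do not follow the expected format
        requests[match["ip"]] += 1
        if match["status"] == "404":
            not_found[match["ip"]] += 1
    return requests, not_found


def suspicious_ips(lines, max_requests=100, max_404_ratio=0.5):
    """Flag IPs with too many requests or an unusually high share of 404s."""
    requests, not_found = summarise(lines)
    return sorted(
        ip for ip, count in requests.items()
        if count > max_requests or not_found[ip] / count > max_404_ratio
    )


# Made-up sample entries for illustration.
SAMPLE_LOG = [
    '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /products/1 HTTP/1.1" 200 512',
    '203.0.113.7 - - [10/Oct/2024:13:55:37 +0000] "GET /products/9998 HTTP/1.1" 404 0',
    '203.0.113.7 - - [10/Oct/2024:13:55:38 +0000] "GET /products/9999 HTTP/1.1" 404 0',
    '198.51.100.2 - - [10/Oct/2024:13:56:00 +0000] "GET / HTTP/1.1" 200 1024',
]
print(suspicious_ips(SAMPLE_LOG))
```

The output of a check like this can feed the automated responses described above, for example by adding flagged addresses to a temporary block list or routing them to a CAPTCHA.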

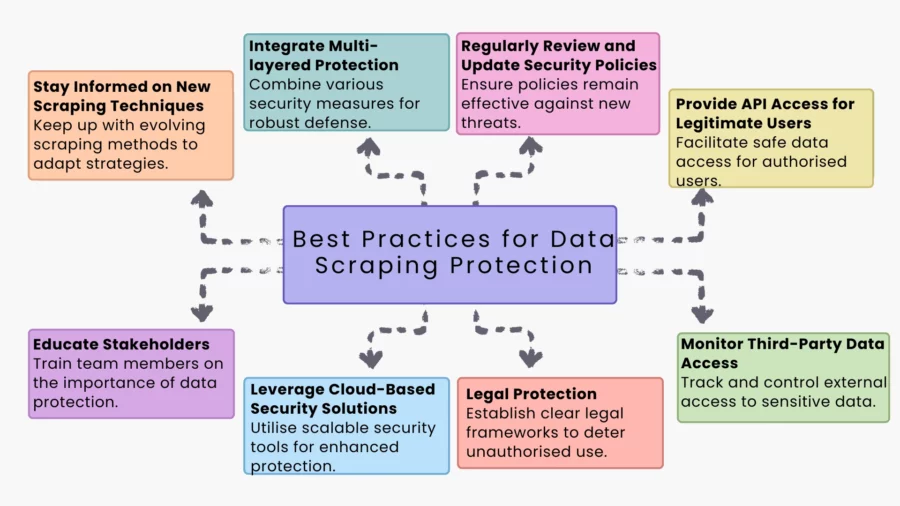

Best Practices for Data Scraping Protection

Integrate Multi-layered Protection

It is not advisable to depend on a single kind of protection, such as CAPTCHA.

Protection should be built in layers: IP rate limiting, bot detection, and WAFs. This makes life much harder for scrapers, which have to evolve or give up the attack altogether.

Stay Informed on New Scraping Techniques

Scraping techniques continue to evolve, with new tools constantly being developed to overcome security measures.

Keeping a website secure means staying informed about the most recent scraping tactics and constantly updating its protection methods.

Educate Stakeholders

Educate your team on the risks of data scraping and how to identify potential threats.

Developers should know the general methods of scraping, while marketing teams should be able to identify how to best protect sensitive content.

Engage cybersecurity experts or consultants regularly to ensure that you keep up with the evolution of scraping threats and protection methods.

Leverage Cloud-Based Security Solutions

Many cloud providers now offer sophisticated security solutions specifically geared toward preventing web scraping. These services make use of large-scale threat intelligence to identify and block scraping traffic from known bad actors.

For example, Cloudflare has a bot management solution that can be tuned to one’s specific needs.

Offloading some of the work of analysing traffic and blocking bots onto a cloud-based service can ease the burden on your own infrastructure.

Provide API Access for Legitimate Users

For companies whose business models involve large volumes of data (for example, weather services, financial data, and product listings), a controlled API can let legitimate users access data while blocking unauthorised scrapers from harvesting large volumes.

You can do this by offering an API with access controls such as authentication, rate limiting, and quotas.
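The combination of authentication and quotas can be sketched as a small gate that every API request passes through. The class `ApiGate`, the key `demo-key`, and the quota of two calls are hypothetical; a real service would persist usage and reset it per billing or time period.

```python
class QuotaExceeded(Exception):
    """Raised when a key has used up its allowance."""


class ApiGate:
    """Checks an API key and enforces a per-key request quota."""

    def __init__(self, quotas):
        self._quota = dict(quotas)               # api_key -> allowed calls
        self._used = {key: 0 for key in self._quota}

    def authorise(self, api_key):
        """Record one call and return how many calls the key has left."""
        if api_key not in self._quota:
            raise PermissionError("unknown API key")
        if self._used[api_key] >= self._quota[api_key]:
            raise QuotaExceeded(f"quota exhausted for {api_key}")
        self._used[api_key] += 1
        return self._quota[api_key] - self._used[api_key]


gate = ApiGate({"demo-key": 2})
remaining = [gate.authorise("demo-key"), gate.authorise("demo-key")]
print(remaining)
```

A third call with the same key raises `QuotaExceeded`, and a call with an unregistered key raises `PermissionError`, so bulk harvesting is capped while ordinary use goes through.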

Regularly Review and Update Security Policies

Scraping technologies and modes of attack change rapidly. In order to keep your defenses strong, regularly revisit your strategy for protection against scraping.

You need to update your firewall rules, CAPTCHA challenges, rate limits, and other protections based on changes in scraping behavior.

Regular testing through security audits and penetration testing will help you find potential weak points in your system.

Monitor Third-Party Data Access

Third-party services and affiliates that have been granted access to your data can easily expose it to scrapers.

Hence, it’s very important to regularly audit and track third-party activity on your website or service to make sure it is not inadvertently or deliberately causing data leakage.

Legal Protection: Terms of Service and Copyright Notices

While these technical means are crucial, legal tools are just as important.

Clearly stating in your website’s Terms of Service that scraping is not allowed strengthens your position if you need to take legal action against someone who scrapes your website.

Putting copyright notices on key content can further help assert your ownership and give you cause for takedown requests if your data is scraped or stolen.

The Future of Data Scraping Protection

The war against data scraping is far from over, and it is only going to get more complicated.

Scrapers will become more sophisticated, with advanced AI and machine learning models able to emulate human behavior even more convincingly.

In response, security measures will have to scale up, with much more emphasis on automation and real-time analysis.

AI-Powered Bot Detection

In the future, more sophisticated AI-driven solutions will play a central role in protecting websites from scraping.

These systems constantly learn from traffic patterns, identifying bots and adapting to new scraping methods much more quickly.

By analysing large volumes of traffic across many websites, AI systems can spot emerging scraping threats before they reach a specific website.

Enhanced Use of Machine Learning

The first steps toward machine intelligence in web security are already visible in web scraping detection and fraud prevention.

These models will evolve to be adept at distinguishing between human and bot visitors based on behavior and interaction.

Blockchain Technology for Data Protection

Research is ongoing to provide better data protection using blockchain-based solutions.

For example, blockchain can offer a decentralised approach for verifying the ownership of data and tracking access requests for scraping or viewing particular datasets, ensuring only authorised users are allowed to do such actions.

This might just prove to be a game-changer in bringing transparency and control with regard to the usage of data.

Collaborative Security Networks

As scraping becomes a bigger problem, collaborative security networks focused on sharing intelligence about scrapers are likely to proliferate.

Such networks pool information about malicious IPs, scraping tools, and attack patterns for a collective defense against scraping. This could be especially useful for e-commerce websites, which are quite often the targets of scraping.

What’s Next?

Web scraping protection is now part of modern website management.

As scrapers grow more sophisticated, ranging from basic HTML parsing to headless browsers and machine learning-driven bots, website owners need an increasingly proactive approach.

It is highly recommended that you implement a defense-in-depth strategy that includes IP rate limiting, CAPTCHA challenges, bot detection, and obfuscation of content.

Furthermore, your defense against unauthorised data scraping becomes more effective when you keep up with emerging trends, use cloud-based security solutions, and offer legitimate API access.

While data scraping protection can be complex and resource-intensive, the long-term benefits of securing your IP, ensuring compliance with data privacy regulations, and safeguarding your website’s performance far outweigh the costs. Because scraping technology and tactics continue to evolve, your defense mechanism must be adaptive and robust.

Book a demo today to see how Bytescare can protect your digital content and let you rest easy. With its many features, Bytescare is here to protect the things that mean most to you in a digital world.

The Most Widely Used Brand Protection Software

Find, track, and remove counterfeit listings and sellers with Bytescare Brand Protection software

FAQs

What are the most common methods for protecting against data scraping?

The most common methods include rate limiting (restricting requests per IP), IP address blocking, user-agent analysis (identifying bots), CAPTCHA implementation, honeypot traps (luring and identifying bots), JavaScript obfuscation (making code harder to read), dynamic content loading, and using web application firewalls (WAFs). A multi-layered approach is most effective.

What role does IP blocking play in data scraping protection?

IP blocking prevents access from specific IP addresses known to be associated with scraping activity. However, its effectiveness is limited as sophisticated scrapers use rotating IPs and proxies to circumvent blocks. It’s more effective as part of a larger protection strategy.

Are there legal implications associated with data scraping and how can they be mitigated?

Yes, scraping copyrighted content or personally identifiable information without permission can be illegal, depending on the jurisdiction and how the data is used. Mitigation involves:

Terms of Service: Clearly prohibiting scraping in your website’s terms of service.

Copyright Notices: Displaying clear copyright notices on your content.

Robots.txt: Using robots.txt to tell well-behaved crawlers which pages to avoid, although it’s not legally enforceable.

Cease and Desist Letters: Sending letters to identified scrapers.

Legal Action: Pursuing legal action for copyright infringement or other violations.
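To show what the robots.txt item in the list above means in practice, the sketch below uses Python’s standard `urllib.robotparser` to read a set of rules the way a well-behaved crawler would. The rules, the bot name `ExampleBot`, and the URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for a site that wants /pricing/ left alone.
ROBOTS_TXT = """\
User-agent: *
Disallow: /pricing/
Crawl-delay: 10
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# A compliant crawler checks each URL before fetching it.
pricing_allowed = rules.can_fetch("ExampleBot", "https://example.com/pricing/plans")
blog_allowed = rules.can_fetch("ExampleBot", "https://example.com/blog/")
delay = rules.crawl_delay("ExampleBot")
print(pricing_allowed, blog_allowed, delay)
```

Nothing forces a scraper to run a check like this, which is why robots.txt works as a signal to ethical crawlers rather than as a technical barrier.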

What technologies or tools can help enhance data scraping protection for websites?

Several tools and technologies enhance protection:

Data Scraping Protection Services: Specialised services offer comprehensive protection, including bot detection, rate limiting, and IP blocking.

Web Application Firewalls (WAFs): WAFs filter malicious traffic and can be configured to block scraping attempts.

Bot Management Solutions: These solutions specialise in identifying and mitigating bot activity, including scrapers.

Monitoring and Logging Tools: These help track website traffic and identify suspicious patterns indicative of scraping.

How can companies detect if their data is being scraped?

Detection methods include:

Monitoring Website Traffic: Analysing server logs for unusual traffic patterns, such as a high number of requests from a single IP or unusual user-agent strings.

Checking for Duplicate Content: Searching for copies of your content on other websites.

Setting up Honeypot Traps: Identifying scrapers when they interact with these hidden traps.

Using Website Monitoring Services: These services can alert you to suspicious activity and potential scraping attempts.

What role does CAPTCHA play in preventing data scraping?

CAPTCHAs present challenges that are easy for humans but difficult for bots. They act as a gatekeeper, preventing automated scrapers from accessing protected content or submitting forms. However, advanced scrapers are sometimes able to bypass CAPTCHAs using OCR or CAPTCHA-solving services, so they are not a foolproof solution on their own.

Ready to Secure Your Online Presence?

You are in the right place. Contact us to learn more.