Key Takeaways:

- Use CAPTCHA, rate limiting, and IP blocking to detect malicious bots and prevent them from accessing your data.

- Obfuscate key content, use dynamic content loading, and disable right-click to deter easy copying or unauthorised access.

- Analyse logs at regular intervals for unusual activity, set honeypots, and use user-agent filtering to identify and block scrapers.

Your website is more than just a place to put things. It is a valuable asset that holds all of your unique content.

What do you do when scrapers come to steal your hard-earned data? Website scraping is becoming a bigger problem for companies of all kinds, from rivals who steal prices to bots that harvest information.

Scraping of this kind is unethical and can do a lot of damage to your brand’s reputation. Imagine putting in a lot of effort to make original content only to have it used by someone else without your permission.

The bad news is that web scraping is not just a technical problem. It’s also a real threat to businesses.

And while you can’t stop every bad actor on the internet, you can make it much harder for them to exploit your site.

How do you protect your website from scraping while still giving genuine users a smooth experience? The answer is to use the right tools to keep your information safe.

We will look at practical ways to stop scrapers and keep your website safe.

Protect Your Brand & Recover Revenue With Bytescare's Brand Protection software

What is Web Scraping?

Web scraping is the process of using automated tools to extract information from websites. Think of it as a way for computers to read and save the content of web pages, much as a person might save an image.

Web scraping isn’t bad in itself. How it’s used is what makes it good or bad.

Legitimate Uses of Web Scraping

Web scraping powers many services we use every day. Search engines like Google rely on it to index web pages and make them searchable.

Price comparison websites use scraping to collect product catalogs from various stores. It’s also used by researchers to gather public data for studies. Web scraping can be a powerful tool for creativity if it is done in an honest way.

Harmful Scraping

Not all scraping is done with good intentions. Some websites are targeted by dedicated scrapers who steal actual content to reuse it without giving proper credit.

Others scrape pricing data to get ahead of rivals. Malicious bots may also harvest email addresses, which can lead to scams.

Not only do these actions infringe intellectual property rights, they can also hurt companies by diverting traffic away from them and making regular users less likely to trust them.

Why Should You Protect Your Website from Scraping?

Web scraping might seem harmless at first glance, but when it’s done unethically, it can have serious consequences for your business.

Preventing scraping of your website isn’t just about keeping your data safe. It’s also about keeping your edge over your competitors. Here is why it’s important:

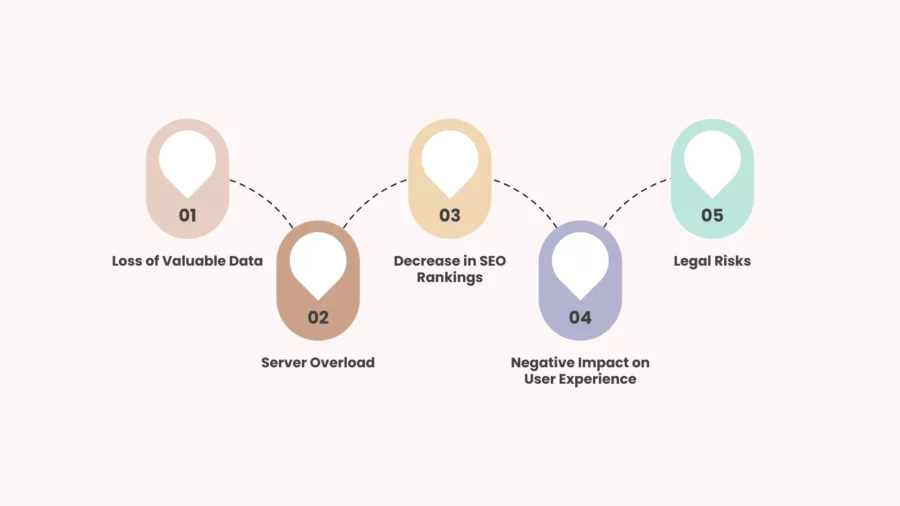

Loss of Valuable Data

Your website is a treasure trove of information: article content, product details, product prices, and more. Scrapers can use this information to their own advantage, for example by cloning your website or republishing your content. This theft could make you less competitive.

Server Overload

Scrapers often send an overwhelming number of requests to your server, consuming bandwidth and resources meant for genuine users. This can cause pages to load slowly, which annoys your visitors and could send them to a rival instead.

Decrease in SEO Rankings

If scrapers copy your content and republish it on other websites, search engines may struggle to identify the original source. This duplication can hurt your search rankings, which makes it harder for potential customers to find you online.

Negative Impact on User Experience

People may think less of your brand if your website is slow because of scraping. Even worse, some scrapers may misuse your data, which could lead your audience to mistrust you.

Legal Risks

Unauthorised scraping of user data or private information from your website can lead to compliance violations, especially under regulations like GDPR. Protecting your site lowers these risks and helps people keep trusting it.


Common Signs Your Website is Being Scraped

| Sign | What It Suggests |
| --- | --- |
| Sudden Spike in Traffic | A significant rise in traffic from sources you don’t recognise could be a sign of automated scraping bots. |
| Unusual Activity Patterns | Repeated access to the same pages at odd hours could be a sign of bot activity. |
| High Bounce Rate | Simple scrapers don’t browse. They just extract data, which increases bounce rates. |
| Overloaded Server | Bots sending requests in rapid succession can overload your server, causing slow load times or downtime. |
| Frequent Requests from the Same IP | A lot of requests from the same IP address in a short amount of time is a sign that someone is scraping. |
| Duplicate Content Found Online | If your articles appear on other websites without your consent, they might have been scraped. |
| Abnormal API Usage | A scraper may be targeting your API if it shows a lot of calls that are unrelated to its intended use. |
| Strange User Agents | It’s common for scraping bots to use fake user agents instead of those of real browsers. |
| Increased Bandwidth Usage | A rise in bandwidth use without a corresponding increase in legitimate user activity is a warning sign. |
| Missing Data | Scrapers may tamper with or extract specific elements, leaving your data incomplete or altered. |

How to Protect Website from Scraping?

Web scraping can make you feel like someone is taking your data. Don’t worry, there is plenty you can do!

With the right strategies, you can protect your valuable content while making sure that legitimate users have a smooth experience. Let’s look at ways to stay safe!

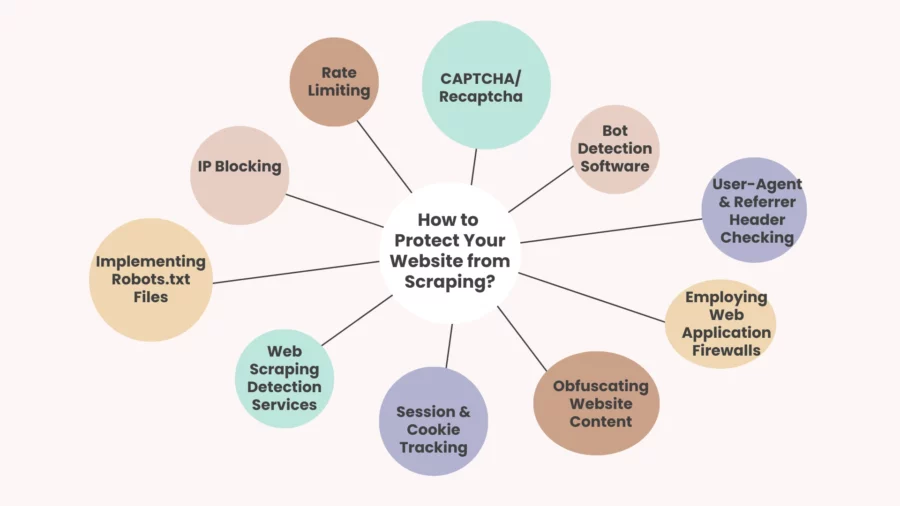

Implementing Robots.txt Files

A robots.txt file tells web crawlers which parts of your website they may access. You can set it up with rules that discourage scraping. For example, to block access to sensitive directories you could use:

    User-agent: *
    Disallow: /private/
    Disallow: /admin/

This instructs all bots not to access the listed folders. You can also target individual bots by replacing * with the name of their user agent.

But even though robots.txt is useful, it’s not perfect. Ethical bots such as search engine crawlers respect it, but scrapers that are out to do harm ignore these rules. And because the file itself is publicly readable, even novice scraper authors can see which paths you’re trying to hide.

Robots.txt is a good start but it should only be one part of a broader anti-scraping strategy. Other strong measures should also be used like IP blocking.
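Before publishing rules like these, it can help to check that they behave the way you expect. The sketch below uses the `urllib.robotparser` module from Python’s standard library to test the example rules locally. The bot name `ExampleBot` and the `example.com` URLs are placeholders chosen for illustration, not values from a real site.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, as they would appear in robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory (no network request is made)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# "ExampleBot" and example.com are hypothetical, used only for this check.
for url in (
    "https://example.com/private/report.html",
    "https://example.com/blog/post.html",
):
    verdict = "allowed" if parser.can_fetch("ExampleBot", url) else "disallowed"
    print(url, "->", verdict)
```

Keep in mind that this only shows what a well-behaved crawler would do. A scraper that ignores robots.txt is not affected by these rules at all.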

IP Blocking

A simple way to stop harmful scrapers from accessing your website is to block their IP addresses.

Using your server settings, you can block individual IP addresses or whole ranges that show non-human behaviour, such as making a large number of identical requests.

A lot of cloud hosting services let you block IPs right in the server settings. You can also use more powerful services such as Cloudflare, which examine traffic patterns and automatically block IPs that look suspicious.

These tools often include rate limiting, which caps the number of requests an IP can make in a given amount of time.

IP blocking works but it has limitations. Smart scrapers can get around it by using proxies. To protect yourself from this, use more than just IP blocking, for example by combining it with CAPTCHA or user-agent filtering.
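To make the idea concrete, here is a minimal sketch of an application-level blocklist check using Python’s standard `ipaddress` module. The addresses are reserved documentation ranges used as placeholders, and the function name `is_blocked` is made up for this example. In practice, blocking is more often done in the server, firewall, or CDN configuration than in application code.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges,
# standing in for addresses you have identified as scrapers.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # a whole range
    ipaddress.ip_network("198.51.100.7/32"),  # a single address
]


def is_blocked(client_ip: str) -> bool:
    """Return True if the client IP falls inside any blocked network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Design choice for this sketch: reject anything that is not a valid IP.
        return True
    return any(address in network for network in BLOCKED_NETWORKS)


print(is_blocked("203.0.113.45"))  # inside the blocked /24 range
print(is_blocked("192.0.2.10"))    # not on the list
```

Blocking whole ranges is a blunt tool, since legitimate users can share a range or even a single address, so it is worth reviewing the list regularly.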

Rate Limiting

Rate limiting is a powerful technique for preventing web scraping. It controls the number of requests a user account or IP address can make within a specific time frame.

It stops suspiciously high traffic from scrapers while ensuring that genuine users can access your website without any issues.

You can use tools like AWS WAF to set limits, such as allowing only 100 requests per minute per IP. If a user exceeds this limit, their access is temporarily blocked or throttled.

By capping requests per time interval, rate limiting makes it harder for bots to scrape large amounts of data quickly. Legitimate users typically browse at a slower pace, so this strategy doesn’t impact their experience.

However, keep in mind that advanced scrapers may use rotating IPs to bypass limits. To strengthen your defense, pair rate limiting with IP blocking, CAPTCHA, and other anti-scraping measures.
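For readers who want to see the mechanism, the following is a minimal in-memory sketch of a sliding-window rate limiter, using the 100 requests per minute per IP figure from above as its default. The class name `SlidingWindowRateLimiter` is invented for this example, and a real deployment would more likely rely on a WAF or a shared store than on a single process’s memory.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int = 100, window_seconds: float = 60.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of recent requests

    def allow(self, client_ip: str, now: float = None) -> bool:
        """Record a request and return True if it is within the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_ip]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: block or throttle this request
        hits.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=100, window_seconds=60.0)
if not limiter.allow("203.0.113.45"):
    print("Too many requests, try again later")
```

The optional `now` argument exists only so the behaviour can be tested with fixed timestamps. Rejected requests are not recorded, so a client that backs off regains access once its earlier requests age out of the window.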

CAPTCHA/reCAPTCHA

An effective way to stop automated bots in their tracks is to add CAPTCHA to your website. Bots have a hard time with these tools because they ask users to complete tasks such as typing distorted text.

CAPTCHA can be used on login pages and any other place on your site where people interact with it. If your website notices that a single source is sending a lot of traffic, a CAPTCHA challenge can help you figure out whether the traffic is coming from a real person or a bot.

Invisible CAPTCHAs, a feature of Google’s reCAPTCHA, add an extra layer of security without bothering real users.

While CAPTCHAs are effective, they should be used sparingly to avoid irritating users. Pairing them with other strategies like rate limiting and IP blocking ensures robust protection while maintaining a smooth user experience.

It’s a simple yet powerful tool to keep scrapers at bay!

Bot Detection Software

Software like BotGuard or PerimeterX can stop harmful bots that are trying to attack your website. These tools use behaviour analysis to tell real human users from automated bots.

They keep track of what users are doing by looking at things like click patterns, which helps you spot strange behaviour. Bots tend to send requests without interacting with the page the way a real user would.

Once these services identify a bot, they can challenge it and stop it from accessing your content. They also offer features like rate limiting, CAPTCHA integration, and IP blocking to enhance protection.

Bot detection software works very well, but you should pick a solution that fits the goals of your website. The right one can greatly reduce scraping activity while keeping the experience seamless for legitimate users.

User-Agent & Referrer Header Checking

User-agent and referrer headers provide valuable information about the source of a website request. You can find strange traffic that might be caused by scraping by looking at these headers.

The user-agent field tells you what kind of client sent the request. Bots often use telltale user-agents like python-requests, while legitimate users have standard browser user-agents such as Chrome’s.

The referrer header shows the page that sent the visitor to your site. Scrapers often don’t follow normal browser conventions, so they might not send a referrer at all.

Checking these headers regularly, including for signs of headless browsers, helps you spot possible scrapers early, and you can block requests that look suspicious. This method is an easy way to reduce unwanted bot traffic without affecting real users.
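As an illustration, the sketch below scores a request’s headers for the signals described above. The marker list, the weights, and the function name `header_suspicion_score` are assumptions made for this example, not an established standard. Note that the HTTP header is actually spelled `Referer`.

```python
# Hypothetical list of substrings that often appear in automated clients'
# User-Agent strings. Tune it to what you actually see in your own traffic.
SUSPICIOUS_UA_MARKERS = ("python-requests", "curl", "wget", "scrapy", "headlesschrome")


def header_suspicion_score(headers: dict) -> int:
    """Return a rough score: 0 looks like a browser, higher looks more bot-like."""
    normalized = {name.lower(): value for name, value in headers.items()}
    user_agent = normalized.get("user-agent", "").lower()

    score = 0
    if not user_agent:
        score += 2  # browsers always send a User-Agent
    elif any(marker in user_agent for marker in SUSPICIOUS_UA_MARKERS):
        score += 2  # self-identified automation tool or headless browser
    if not normalized.get("referer"):
        score += 1  # weak signal: direct visits also have no referrer
    return score


print(header_suspicion_score({"User-Agent": "python-requests/2.31.0"}))
```

Headers are easy to fake, so a score like this is best treated as one input among several rather than as grounds for blocking on its own.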

Employing Web Application Firewalls

A Web Application Firewall (WAF) plays an essential role in protecting your website from malicious traffic, including scraping bots. A WAF sits between your web server and incoming traffic, filtering requests as they arrive.

WAFs can stop harmful traffic based on unusual request rates or known attack signatures. They are very good at stopping scraping attempts because they inspect bot behaviour before the requests even reach your website. They also have extra security features that help protect you from DDoS attacks.

When choosing a WAF, Cloudflare and AWS WAF are good options because of their bot detection capabilities. These services come with preconfigured security rules, which makes it easier to maintain a strong defense. When used with other security measures, a WAF can greatly lower the chances of scraping.

Obfuscating Website Content

Obfuscating website content means making it harder for scrapers to extract useful information from your pages. Common ways to do this are to load content through JavaScript or to encode text in a way that simple bots cannot interpret. Showing data as images or breaking up text into small fragments can also confuse scrapers.

Pros:

- Prevents easy data extraction: Because the material isn’t in a simple format, scrapers have a hard time getting to the essential components.

- Improves data protection: Makes it more difficult for competitors or malicious actors to steal valuable information.

Cons:

- Impact on user experience: For legitimate users, dynamic loading might make pages take longer to load.

- Not foolproof: Advanced scrapers can still get around obfuscation techniques by using more sophisticated methods.
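One simple form of text encoding is to write sensitive strings, such as an email address, as HTML numeric character references. Browsers render them normally, but a naive scraper searching the raw HTML for an `@` will not find one. The sketch below shows the idea. The function name and the sample address are placeholders for illustration.

```python
import html


def encode_as_entities(text: str) -> str:
    """Encode every character as an HTML numeric character reference."""
    return "".join(f"&#{ord(ch)};" for ch in text)


# "sales@example.com" is a placeholder address.
encoded = encode_as_entities("sales@example.com")
print(encoded)

# A browser (or any HTML-aware parser) decodes it back to the original text.
print(html.unescape(encoded))
```

As the cons above note, this only deters simple tools. Any scraper that decodes entities or renders the page sees the original text.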

Session & Cookie Tracking

Session and cookie tracking is a method of differentiating between real users and bots by monitoring user activity over time. When a legitimate user visits your site, a session is created, and cookies are stored in their browser.

These cookies help track their actions and ensure they are interacting with your site in a normal way.

Bots, on the other hand, typically don’t handle sessions or cookies like real users. They might not store cookies or they might send requests with session data that isn’t quite right. You can stop bots from scraping your site by keeping an eye on these actions.

By adding another layer of analysis, session and cookie tracking makes it easier to spot bots. But it’s important to combine it with other anti-scraping measures like rate limiting for fuller protection.
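One way to notice "session data that isn’t quite right" is to sign the session cookie so that forged or altered values can be detected. The sketch below uses Python’s standard `hmac` module. The function names, the cookie format, and the placeholder secret are assumptions for this example. Most web frameworks offer signed sessions built in, and those are usually the better choice.

```python
import hashlib
import hmac
import secrets

# Placeholder only. A real key must be long, random, and kept out of source code.
SECRET_KEY = b"replace-with-a-real-secret"


def _sign(session_id: str) -> str:
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def issue_token(session_id: str = None) -> str:
    """Create a cookie value of the form '<session_id>.<signature>'."""
    session_id = session_id or secrets.token_hex(16)
    return f"{session_id}.{_sign(session_id)}"


def is_valid_token(token: str) -> bool:
    """Return True only if the cookie is present and its signature matches."""
    if not token:
        return False  # client did not send the cookie back
    session_id, separator, signature = token.rpartition(".")
    if not separator or not session_id:
        return False  # malformed value
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(signature.encode(), _sign(session_id).encode())


cookie = issue_token()
print(is_valid_token(cookie))
print(is_valid_token("forged-session.0000"))
```

A client that repeatedly arrives without the cookie, or with one that fails this check, is behaving unlike a normal browser and may deserve closer scrutiny.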

Web Scraping Detection Services

These services stop scraping attempts in real time, before they can do any damage. They use sophisticated algorithms to analyse user behaviour and tell legitimate users from harmful bots.

By constantly watching incoming requests, they can spot anomalies such as requests that come from IP addresses already known to be suspicious.

Many site owners use services like BotGuard to protect themselves automatically by stopping any behaviour that seems suspicious. These services can even use CAPTCHAs to stop scrapers or block whole IP ranges that show suspicious activity.

The advantage of these professional scraping detection services is their ability to detect scraping in real time, allowing you to respond immediately without manual intervention.

They may cost money, and some of their settings may need to be adjusted to meet the needs of your website. When you use these services alongside other security measures, you get strong protection.


Best Practices for Website Protection

To effectively protect your website from scraping attacks, adopting a combination of best practices is key. Here are some essential steps to safeguard your content and data:

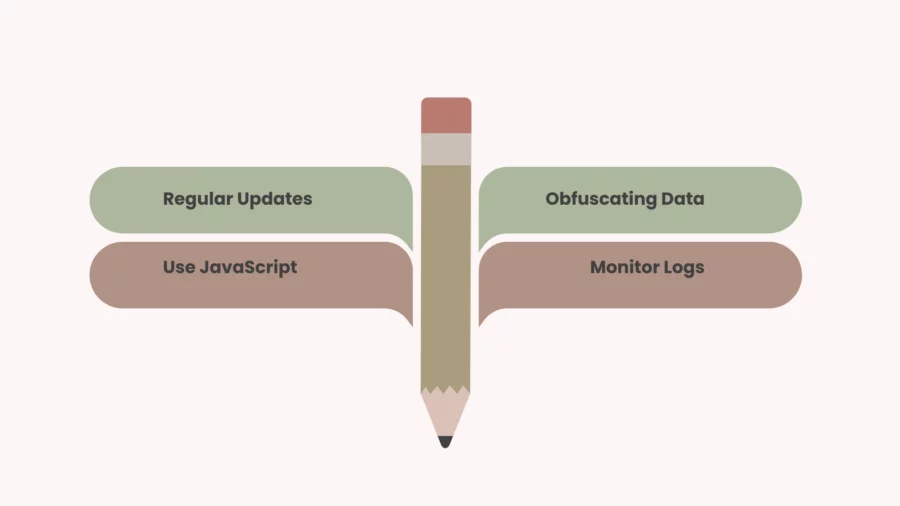

Regular Updates

Always make sure that your website’s plugins and security patches are up to date. Cybercriminals often exploit outdated software to get around security measures. By keeping up with updates, you lower the chance that scrapers will take advantage of security gaps.

Obfuscating Data

Obscuring valuable data, such as prices, can make it much more difficult for scrapers to get useful information. Encoding text or using JavaScript to load content on the fly can confuse automated tools. This makes it harder for them to scrape your website.

Use JavaScript

Rendering content dynamically with JavaScript can make it harder to scrape. Many simple bots are built to parse static HTML, so it can be hard for them to get information from pages that rely heavily on JavaScript. Dynamic rendering adds an extra layer of protection against these scrapers.

Monitor Logs

Scraping attempts can be found by checking your server logs and analytics tools such as Google Analytics. Look for patterns like a lot of traffic coming from a single IP address. By being proactive, you can find scraping behaviour early and stop the threat.
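If you have access to raw server access logs, a short script can surface the "lots of traffic from a single IP" pattern. The sketch below assumes a log format where the client IP is the first field on each line, as in the widely used Common Log Format. The sample lines, the threshold, and the function name `top_talkers` are made up for illustration.

```python
from collections import Counter


def top_talkers(log_lines, threshold):
    """Return (ip, request_count) pairs for IPs with at least `threshold` requests."""
    counts = Counter()
    for line in log_lines:
        fields = line.split(maxsplit=1)
        if fields:  # skip blank lines
            counts[fields[0]] += 1  # assumption: the IP is the first field
    return [(ip, n) for ip, n in counts.most_common() if n >= threshold]


# Hypothetical sample data using reserved documentation addresses.
sample_log = [
    '203.0.113.9 - - [10/Oct/2024:13:55:36 +0000] "GET /products/1 HTTP/1.1" 200 2326',
    '203.0.113.9 - - [10/Oct/2024:13:55:36 +0000] "GET /products/2 HTTP/1.1" 200 2290',
    '203.0.113.9 - - [10/Oct/2024:13:55:37 +0000] "GET /products/3 HTTP/1.1" 200 2301',
    '192.0.2.44 - - [10/Oct/2024:13:56:02 +0000] "GET / HTTP/1.1" 200 5120',
]

for ip, count in top_talkers(sample_log, threshold=3):
    print(f"{ip} made {count} requests")
```

On a real site you would pick the threshold from your normal traffic levels, and you might count per minute or per hour rather than over the whole file.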

Legal Measures to Protect Your Website

Legal steps can be just as important as technical ones when it comes to protecting your website from scraping. The key measures are:

Terms of Service

One of the most effective ways to legally protect your website is by creating and enforcing clear terms of service. These terms should make it clear that scraping is not allowed. You should also outline what will happen if this rule is broken.

By stating clearly that scraping is not allowed, you establish a legal basis for taking action against people who do it. Make sure the terms explicitly cover scraping so that you are fully protected.

Copyright & Trademark Protection

Ensure that your website content is protected under copyright law. Copyright laws automatically protect original works of authorship, such as written content, images, and code.

You can deter scrapers from using your content without permission by asserting your copyright. Registering your content can make it easier to take legal action if it is stolen.

Cease and Desist Letters

If you identify a scraper violating your website’s terms or copyright, sending a cease and desist letter can be an effective first step. This letter formally requests the scraper to stop their activity immediately and can be sent to the offending party or their hosting provider.

If ignored, further legal actions can be taken, such as filing a Digital Millennium Copyright Act (DMCA) takedown notice or pursuing a lawsuit.

Challenges in Protecting Websites from Scraping

Protecting your website from scraping can be a difficult and ongoing challenge, with several factors to consider:

Difficulty of Completely Stopping Scraping

Completely stopping web scraping is nearly impossible. While you can implement a variety of technical and legal measures, determined scrapers can often find ways to bypass them.

They may use advanced techniques like rotating IP addresses, disguising their user-agents, or mimicking human interactions.

The tools available to scrapers are becoming increasingly sophisticated, making it harder to differentiate between legitimate users and malicious bots. This means that while you can significantly reduce scraping, eliminating it entirely remains a challenge.

Balancing Accessibility

One of the key difficulties is striking the right balance between accessibility for legitimate users and protection against scrapers. Measures like CAPTCHAs or rate limiting can slow down bots, but they may also frustrate real users.

If security measures are too aggressive, they can degrade the user experience, which could lead to revenue loss. Therefore, it’s essential to implement protections that minimise disruption for genuine user interactions while deterring scrapers.

Ongoing Need for Monitoring

Scraping techniques are constantly evolving, and new methods are regularly developed to bypass existing protections. This means that website owners must continuously monitor traffic, update security measures, and adapt to emerging threats.

A static defense system can quickly become outdated, so staying proactive and flexible is essential to maintaining robust protection against malicious web scraping.

Tools for Website Scraping Protection

| Tool | Description |
| --- | --- |
| Cloudflare | A popular CDN and security service offering bot protection and DDoS mitigation. |
| AWS Web Application Firewall | A firewall service that protects web applications from common threats. |
| PerimeterX | A bot mitigation service that uses machine learning to detect and block bots. |
| BotGuard | A bot detection and mitigation platform designed to prevent malicious traffic. |
| Sucuri | A website security platform that provides malware scanning and bot protection. |
| Distil Networks | A bot detection platform that helps protect websites from scrapers and fraud. |
| DataDome | An AI-powered bot protection service that detects and blocks scraping attempts. |
| ScrapeShield | A bot management solution offering protection from scraping and content theft. |

What’s Next?

Protecting your website from scraping requires a multi-layered approach that combines technical, legal, and ongoing monitoring strategies.

Implementing measures like robots.txt, CAPTCHA, rate limiting, and IP blocking can significantly reduce the chances of scraping, while tools like Web Application Firewalls and bot detection services offer robust protection against advanced scraping techniques.

However, it’s important to strike a balance between securing your actual content and maintaining a positive user experience. Additionally, continuously adapting to new scraping methods and regularly monitoring traffic is essential to staying ahead of potential threat actors.

By using these anti-scraping tools, you can safeguard your website’s data, preserve its performance, and minimise the impact of malicious scraping.

Protect your brand with Bytescare’s comprehensive Brand Protection Solutions. Our advanced system monitors for unauthorised use, phishing, and trademark infringement.

With proactive brand name scanning and trademark detection, safeguard your brand’s identity across the digital space. Ensure your reputation stays secure—contact us today for peace of mind!


FAQs

Is it possible to prevent web scraping?

Completely preventing web scraping is difficult, but you can reduce its impact by using a combination of techniques like CAPTCHA, rate limiting, IP blocking, and advanced bot detection tools. However, determined scrapers may still find ways around protections.

Can a website block scraping?

Yes, a website can block scraping by implementing measures like IP blocking, CAPTCHA, robots.txt files, and Web Application Firewalls (WAFs). While these methods can deter scrapers, advanced bots may still bypass some of these protections.

Can you detect web scraping?

Yes, web scraping can be detected by monitoring unusual traffic patterns, such as rapid requests from a single IP, missing referrer headers, or abnormal user-agent behavior. Bot detection software and server log analysis tools can help identify scraping attempts in real time.

How does a robots.txt file help in preventing web scraping?

A robots.txt file can guide search engines and bots by disallowing access to certain pages, preventing scraping of specific content. However, it’s not foolproof since malicious bots may ignore these instructions and still scrape the site.

What are the legal implications of web scraping?

Web scraping may violate copyright laws, terms of service agreements, and data protection regulations. Scrapers can face legal consequences, including cease-and-desist orders or lawsuits for unauthorised data extraction or misuse of protected content.

What is web scraping, and why should I be concerned about it?

Web scraping is the process of extracting data from websites using automated tools. It’s a concern because it can lead to content theft, intellectual property violations, and server overload, potentially affecting your website’s performance and revenue.
