Key Takeaways:

- Robust anti-bot measures are essential for protecting site content from threat actors who use automated tools to extract data, and for keeping application operations reliable.

- Strong authentication protocols are crucial for verifying legitimate users and preventing unauthorised access, thereby enhancing the effectiveness of anti-bot solutions.

- Choosing the right anti-bot solution vendor can significantly impact the effectiveness of your anti-scraping protection, as they provide tailored technologies to mitigate risks posed by threat actors.

Web scraping is a common technique used to extract data from websites. While there are valid reasons to scrape data—like price comparison, research, and data mining—many scrapers do it for less noble purposes, such as stealing content or overwhelming a site.

Anti-scraping protection helps website owners defend against unwanted or malicious bots that gather data without permission.

In this guide, you will learn what anti-scraping protection is, why it matters, and which strategies work best.

Protect Your Brand & Recover Revenue With Bytescare's Brand Protection software

An Overview of Web Scraping

Definition and Purpose

Web scraping is any process by which software scripts, or bots, automatically request and parse web pages for specific data.

The scraped data can range from product listings on an e-commerce website to research articles in a digital library or social media posts.

Many people scrape for legitimate, productive reasons, such as:

Competitive Intelligence: Businesses scrape their competitors’ websites to analyse prices, product availability, and promotional strategies.

Academic Research: Researchers scrape large datasets from websites for text analysis or sociological studies.

Aggregated Services: Comparison sites, for example, merge data from multiple sources to present an aggregated view to end users.

Potential Malicious Uses

Not all scraping, however, is benign. Malicious actors and unscrupulous companies may scrape in ways that are either harmful or otherwise unethical:

Intellectual Property Theft: Some scrapers copy a site’s articles, product descriptions, or images, in whole or in part, and republish them as their own.

Spam/Contact Harvesting: Forums, social media, and business listing sites can be targeted by bots looking to harvest email addresses or phone numbers to use in spam or phishing campaigns.

Data Reselling: Large-scale scrapes of e-commerce listings can be resold to third parties, competitors, or data brokers without permission.

The larger an organisation’s digital footprint, the more reason it has to invest in anti-scraping protection that fends off unauthorised scrapers while still allowing legitimate user traffic and permitted data extraction.

What Is Anti-Scraping Protection?

Anti-scraping protection is a set of methods and tools that identify and block unwanted automated programs (often called bots) from accessing a website’s data. This data can range from product pricing and customer reviews to articles and proprietary information.

Websites implement anti-scraping measures to protect their valuable content, maintain server stability, and prevent misuse of their data.

It ensures that:

- Legitimate users get the content they need.

- Malicious bots are stopped from stealing or overloading servers.

Imagine a price comparison website scraping a competitor’s product listings every few minutes. This constant barrage of requests can overload the target website’s servers, slowing down performance for legitimate users. Similarly, scraping copyrighted content or customer data poses serious legal and ethical risks.

A 2022 Imperva report found that bad bots accounted for nearly 28% of all website traffic, a significant portion of which is likely dedicated to scraping. This highlights the prevalence of scraping and the need for effective countermeasures.

For example, some ticket resale platforms use sophisticated anti-scraping techniques to prevent bots from buying up large quantities of tickets, ensuring fair access for human customers.

E-commerce sites also employ anti-scraping to protect against unauthorised price monitoring and inventory tracking by competitors.

The consequences of inadequate anti-scraping protection can be severe. Businesses may lose revenue due to price undercutting, suffer reputational damage from stolen content, and face legal challenges related to data privacy violations.

Implementing robust anti-scraping measures is crucial for protecting a website’s integrity and ensuring a positive user experience.

Quote: “Scraping can be a double-edged sword—useful for research, harmful for theft. Anti-scraping protection makes sure the sword doesn’t cut you.”

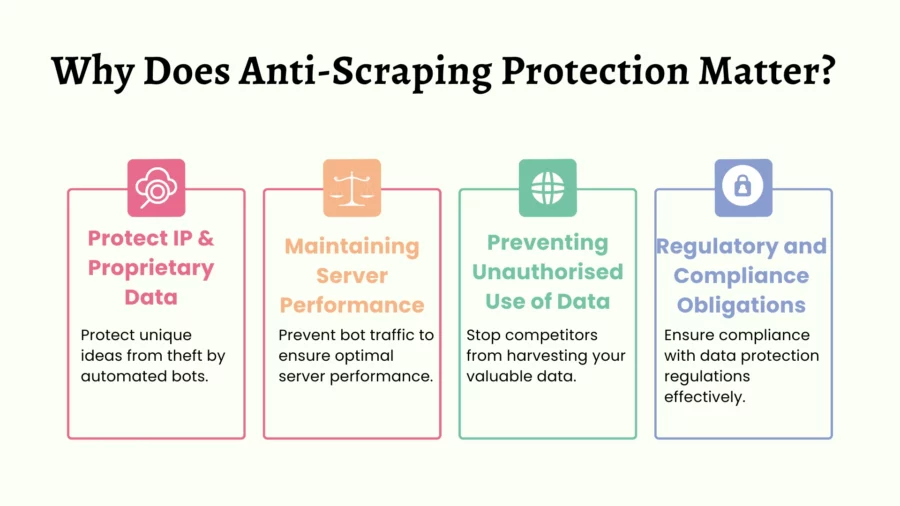

Why Does Anti-Scraping Protection Matter?

Web scraping can pose risks to businesses and individuals. Here’s why anti-scraping measures are so important:

Safeguarding Intellectual Property and Proprietary Data

Companies invest significant resources in creating original content and building product databases, curated user experiences, or unique functionalities.

Unchecked scraping can result in theft of proprietary data, causing loss of competitive advantage and, in some cases, direct revenue losses.

Intellectual property theft also has broader implications, as website owners may lose trust among customers who see their content re-published elsewhere.

Maintaining Server Performance

Web scraping can overwhelm servers with a high volume of automated requests. Under normal circumstances, websites are designed to handle traffic from human users who navigate pages at typical browsing speeds.

Automated scrapers, on the other hand, can issue thousands of requests in minutes, potentially degrading site performance or even causing server outages if not carefully managed.

Preventing Unauthorized Use of Data

Some industries handle highly sensitive data—for instance, real estate listings, travel booking systems, or user-generated reviews.

Scraped data can be manipulated, repackaged, or reused without authorisation, leading to infringement or misleading duplications of data. This can damage the brand reputation of the original content owner and confuse or mislead end-users seeking accurate information.

Regulatory and Compliance Obligations

In certain jurisdictions, websites that host user data are obliged to enforce various privacy rules and regulations (e.g., GDPR in Europe, CCPA in California). Although these regulations primarily govern how data is handled and shared, failing to prevent large-scale scraping can lead to inadvertent data exposure.

Websites that host sensitive personal data are particularly vulnerable and must demonstrate diligence in protecting user information.

Who Uses Web Scraper Bots, and Why?

| User Type | Motivation | Description | Legitimate/Malicious |
|---|---|---|---|
| Price Comparison Websites | Gather pricing data | Collect product prices from various e-commerce sites to enable consumer comparison shopping. | Legitimate |
| Market Research Companies | Analyse market trends | Collect data on product trends, competitor activities, and consumer sentiment. | Legitimate |
| Academic Researchers | Conduct research | Gather data for various studies, including social media analysis, trend tracking, and historical research. | Legitimate |
| Journalists & Media | Gather information | Collect data from public sources for news articles and investigations. | Legitimate |
| SEO Professionals | Website analysis | Analyse website structure, identify broken links, and track keyword rankings. | Legitimate |
| Lead Generation Companies | Sales & marketing | Collect contact information for sales and marketing (ethical considerations are crucial). | Legitimate/Malicious (depending on practices) |
| Travel Aggregators | Aggregate travel data | Gather flight and hotel information from various sources for user convenience. | Legitimate |
| Competitors | Unfair competition | Scrape pricing data to undercut prices or steal proprietary information. | Malicious |
| Spammers | Harvest contact info | Collect email addresses and phone numbers for spam campaigns. | Malicious |
| Copyright Infringers | Steal content | Copy copyrighted content, including articles, images, and product descriptions. | Malicious |
| Fraudsters | Identity theft & fraud | Collect personal information for identity theft or other fraudulent activities. | Malicious |
| Scalpers | Purchase limited items | Buy up high-demand items like concert tickets or limited-edition products for resale at inflated prices. | Malicious |

Key Anti-Scraping Techniques

| Technique | What It Does | Pros | Cons |
|---|---|---|---|
| Rate Limiting | Limits requests per IP or user-agent over time | Simple to set up; cost-effective | May block legitimate users if too strict |
| IP Blocking & Reputation Checks | Blocks known malicious IPs or those with a bad reputation | Effective at stopping known threats | Requires frequent updates; can be bypassed with proxies |
| User-Agent Validation | Verifies the legitimacy of user-agents | Quick detection of basic bots | Advanced scrapers can spoof user-agents |
| CAPTCHA & Challenge Pages | Presents puzzles or challenges to suspicious visitors | Good deterrent for automated scripts | Annoying for real users if used too often |
| Behavioral Analysis | Tracks mouse movement, click speed, and navigation patterns | Very accurate at detecting advanced bots | Requires more resources; complex to implement |
| Honeypots & Obfuscation | Traps or hidden fields to catch naive bots | Can trick bots into revealing themselves | Skilled attackers may recognise these traps |
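
Rate limiting, the first technique in the table above, can be sketched as a sliding-window counter keyed by client IP. This is a minimal in-memory illustration, not a production design: the class name `SlidingWindowLimiter`, the limits, and the example IP address are all assumptions made for the example.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client key -> timestamps of that client's recent accepted requests
        self._hits = defaultdict(deque)

    def allow(self, client_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[client_key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject or challenge the request
        hits.append(now)
        return True


# Hypothetical policy: 3 requests per 10 seconds per IP.
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10)
decisions = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 11)]
print(decisions)  # [True, True, True, False, True]
```

A limit this strict would also throttle some real users, which is the trade-off noted in the Cons column.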

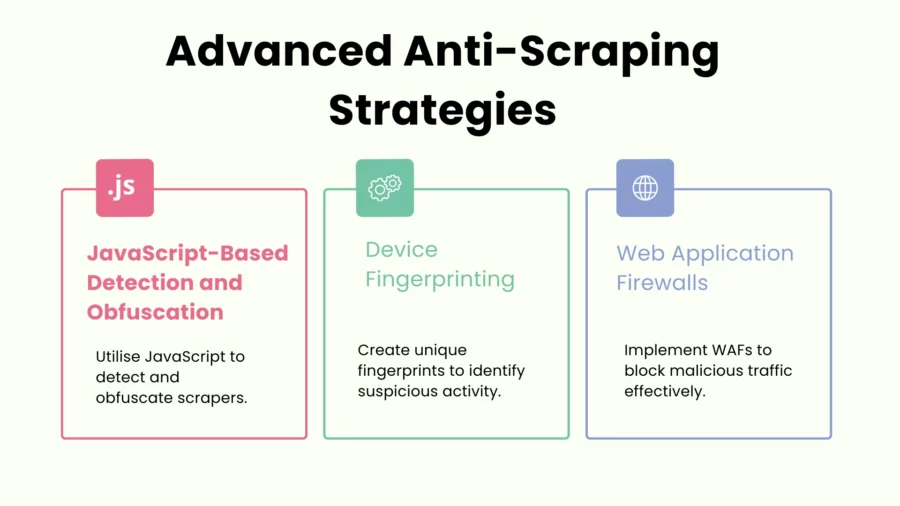

JavaScript-Based Detection and Obfuscation

Modern anti-scraping solutions often require the visitor’s browser to execute specific JavaScript that confirms real browser behavior.

The script can track mouse movements, randomise tokens, or apply techniques such as device fingerprinting.

Websites can also obfuscate their JavaScript, making it harder for scrapers to analyse or replicate the code.

Advantages

- Effectively detects non-browser automation tools and bots that can’t execute JavaScript.

- Obfuscated code is harder for scrapers to interpret or replicate.

Disadvantages

- Advanced scrapers can drive headless browsers with frameworks such as Puppeteer to execute the JavaScript anyway.

- Possible false positives if legitimate users have disabled JavaScript.
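
One way to picture the randomised tokens mentioned above is a signed challenge token: the server issues it, the page’s JavaScript must send it back, and the server checks it before serving data. The sketch below covers only the server side and rests on assumptions: the token layout, the 300-second lifetime, and the function names `issue_token` and `verify_token` are invented for illustration.

```python
import hashlib
import hmac
import secrets

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-deployment secret
TOKEN_TTL_SECONDS = 300               # assumed token lifetime


def _sign(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(session_id: str, issued_at: int) -> str:
    """Build a token that the page's JavaScript is expected to echo back."""
    payload = f"{session_id}:{issued_at}"
    return f"{payload}:{_sign(payload)}"


def verify_token(token: str, session_id: str, now: int) -> bool:
    """Accept only unexpired tokens that were signed for this session."""
    try:
        token_session, issued_at_text, signature = token.rsplit(":", 2)
        issued_at = int(issued_at_text)
    except ValueError:
        return False  # malformed token
    expected = _sign(f"{token_session}:{issued_at}")
    return (
        hmac.compare_digest(signature.encode(), expected.encode())
        and token_session == session_id
        and 0 <= now - issued_at <= TOKEN_TTL_SECONDS
    )


token = issue_token("sess-1", issued_at=1000)
print(verify_token(token, "sess-1", now=1100))  # True
print(verify_token(token, "sess-1", now=2000))  # False (expired)
```

A client that never runs the page script has no token to return. A headless browser will obtain one, so a check like this is best treated as one layer among several.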

Device Fingerprinting

Device fingerprinting collects information about the visitor’s browser, operating system, timezone, screen resolution, and installed plug-ins, and combines it into a unique “fingerprint”.

If several requests have the same fingerprint but originate from different IP addresses, or if some attributes change suspiciously between requests, the system can flag the activity as suspicious.

Advantages

- Effective at catching bots that use rotating IPs or simple header spoofing.

- Helps build a real-time reputation score for each fingerprint.

Disadvantages

- May raise privacy issues.

- Resource-intensive to implement and maintain.

- Advanced scrapers may dynamically change their fingerprints to appear legitimate.
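
The idea of one fingerprint appearing behind many IP addresses can be sketched as follows. The attribute names, the 16-character hash prefix, and the threshold are assumptions chosen for the example; real fingerprinting products collect far more signals.

```python
import hashlib
from collections import defaultdict


def fingerprint(attributes: dict) -> str:
    """Hash browser attributes into a short, stable identifier."""
    # Sort keys so the same attributes always produce the same hash.
    canonical = "|".join(f"{key}={attributes[key]}" for key in sorted(attributes))
    return hashlib.sha256(canonical.encode()).hexdigest()[:16]


class FingerprintTracker:
    """Flag fingerprints that show up from more IPs than expected."""

    def __init__(self, max_ips_per_fingerprint: int = 3):
        self.max_ips = max_ips_per_fingerprint
        self._ips_seen = defaultdict(set)  # fingerprint -> set of client IPs

    def observe(self, attributes: dict, ip: str) -> bool:
        """Record a request; return True if the fingerprint looks suspicious."""
        seen = self._ips_seen[fingerprint(attributes)]
        seen.add(ip)
        return len(seen) > self.max_ips


# Hypothetical attribute set reported by the visitor's browser.
browser = {
    "user_agent": "ExampleBrowser/1.0",
    "os": "Linux",
    "timezone": "UTC",
    "screen": "1920x1080",
    "plugins": "pdf-viewer",
}
tracker = FingerprintTracker(max_ips_per_fingerprint=2)
flags = [
    tracker.observe(browser, ip)
    for ip in ("198.51.100.1", "198.51.100.2", "198.51.100.3")
]
print(flags)  # [False, False, True]
```

Many real users share common device configurations, so a flag like this is better used as one input to a reputation score than as grounds for an outright block.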

Web Application Firewalls (WAF)

A WAF inspects all incoming requests, using pre-defined rules or machine learning algorithms to identify suspicious traffic patterns, including SQL injection and cross-site scripting attempts.

It can also block IP addresses already known to be malicious. Many modern WAFs include modules for detecting bots and scrapers.

Advantages

- Broad protection against a wide range of threats, not just scraping.

- Centralised management of security policies.

Disadvantages

- Deployment and maintenance can be cumbersome, especially for larger websites.

- Advanced attackers can easily bypass or evade a simple WAF rule.
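
To make the rule-based side of a WAF concrete, here is a deliberately simple sketch that checks a request against a blocklist and two pattern rules. The patterns, the blocked IP, and the function name `inspect_request` are illustrative assumptions. Real WAF rule sets are far larger, and naive patterns like these produce false positives and are easy to evade, which is the weakness noted in the last bullet above.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# Hypothetical, intentionally simplistic rules.
RULES = {
    "sql_injection": re.compile(
        r"(\bunion\b\s+\bselect\b|\bor\b\s+1\s*=\s*1|--\s*$)", re.IGNORECASE
    ),
    "xss": re.compile(r"(<\s*script\b|javascript:|\bon\w+\s*=)", re.IGNORECASE),
}
BLOCKED_IPS = {"203.0.113.50"}  # assumed reputation list


def inspect_request(ip: str, query_string: str) -> List[str]:
    """Return the names of triggered rules; an empty list means allow."""
    triggered = []
    if ip in BLOCKED_IPS:
        triggered.append("blocked_ip")
    # Decode percent-encoding first so encoded payloads are still matched.
    decoded = unquote_plus(query_string)
    for name, pattern in RULES.items():
        if pattern.search(decoded):
            triggered.append(name)
    return triggered


print(inspect_request("198.51.100.9", "q=shoes&page=2"))     # []
print(inspect_request("198.51.100.9", "id=1%20OR%201%3D1"))  # ['sql_injection']
```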

Best Practices for Effective Protection

Employ a multi-layered approach: Never rely on one technique. Combine rate limiting, request pattern analysis, header analysis, and behavioral biometrics for comprehensive protection. This makes it much more difficult for scrapers to bypass your defenses.

Regular Monitoring and Analysis: Check website traffic regularly for suspicious patterns and unusual activity. Analysing server logs, traffic sources, and user behavior can reveal scraping attempts. Early detection is paramount, so make this analysis routine.
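
As a rough illustration of log analysis, the sketch below counts requests per client IP and flags clients far above the typical volume. It assumes the IP address is the first whitespace-separated field of each log line, and the multiplier of 10 is an arbitrary starting point. The sample log lines are made up.

```python
from collections import Counter
from statistics import median


def requests_per_ip(log_lines):
    """Count requests per client IP (assumed to be the first field)."""
    return Counter(line.split()[0] for line in log_lines if line.strip())


def flag_heavy_clients(counts, multiplier=10):
    """Return IPs whose request count exceeds `multiplier` x the median."""
    if not counts:
        return []
    threshold = multiplier * median(counts.values())
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Made-up log excerpt: two ordinary visitors and one very busy client.
sample_log = (
    ['198.51.100.1 - - "GET /products/1 HTTP/1.1" 200'] * 3
    + ['198.51.100.2 - - "GET /products/2 HTTP/1.1" 200'] * 4
    + ['203.0.113.9 - - "GET /products/3 HTTP/1.1" 200'] * 120
)
counts = requests_per_ip(sample_log)
heavy = flag_heavy_clients(counts)
print(heavy)  # ['203.0.113.9']
```

Comparing against the median rather than a fixed number lets the threshold follow normal traffic levels. Flagged IPs still need a human or a second signal to confirm, since search engine crawlers can look similar.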

Adaptive Defense Strategies: Scraping techniques change over time. Stay aware of the latest scraping methods and update your anti-scraping tools and strategies periodically to counter sophisticated scrapers.

Use Honeypots and CAPTCHAs Judiciously: Place honeypots at strategic locations in your website’s code to trap bots. Present CAPTCHAs when suspicious activity is detected, but avoid overusing them, because they degrade the user experience.
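
A common form of honeypot is a form field that people never see but naive bots fill in. The following sketch assumes a hypothetical field name `website_url` and a contact form at `/contact`, both invented for the example.

```python
HONEYPOT_FIELD = "website_url"  # hypothetical hidden field; humans never see it


def render_form() -> str:
    """Return a form whose honeypot input is moved off-screen with CSS."""
    return (
        '<form method="post" action="/contact">\n'
        '  <input name="email" type="email">\n'
        f'  <input name="{HONEYPOT_FIELD}" type="text" tabindex="-1"\n'
        '         autocomplete="off" aria-hidden="true"\n'
        '         style="position:absolute; left:-9999px">\n'
        '  <button type="submit">Send</button>\n'
        "</form>"
    )


def is_probable_bot(form_data: dict) -> bool:
    """A filled-in honeypot field suggests an automated submission."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())


print(is_probable_bot({"email": "a@example.com", "website_url": ""}))  # False
print(is_probable_bot({"email": "x@example.com",
                       "website_url": "http://spam.example"}))         # True
```

The `tabindex="-1"` and `aria-hidden="true"` attributes are there to keep keyboard and screen-reader users from landing on the hidden field and being misclassified.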

JavaScript Rendering and Challenges: Use JavaScript to render dynamic content and to present interactive challenges. Simple bots find these hard to get past, which blocks most basic forms of scraping.

Implement Robust Fingerprinting Techniques: Use advanced fingerprinting to track returning bots even when they rotate IP addresses or use other evasion techniques. This helps build a profile of persistent scrapers.

Employ Intelligent Rate Limiting: Adjust rate thresholds dynamically according to traffic patterns and user behavior. This lets legitimate users access the site without hindrance while throttling suspicious activity.
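
One possible reading of dynamic thresholds is a token bucket whose refill rate shrinks as a client looks more suspicious. In this sketch the `suspicion` score (0 for trusted, 1 for almost certainly a bot) is a hypothetical input that would come from other detectors, and the rates are arbitrary.

```python
class AdaptiveTokenBucket:
    """Token bucket whose refill rate drops as suspicion rises."""

    def __init__(self, base_rate: float, capacity: float):
        self.base_rate = base_rate  # tokens per second for a trusted client
        self.capacity = capacity    # maximum burst size
        self.tokens = capacity
        self.last_refill = 0.0

    def allow(self, now: float, suspicion: float) -> bool:
        # Clamp the hypothetical suspicion score to the range [0, 1].
        suspicion = min(max(suspicion, 0.0), 1.0)
        rate = self.base_rate * (1.0 - suspicion)
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * rate)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # spend one token for this request
            return True
        return False


trusted = AdaptiveTokenBucket(base_rate=1.0, capacity=2)
suspect = AdaptiveTokenBucket(base_rate=1.0, capacity=2)
trusted_result = [trusted.allow(t, suspicion=0.0) for t in (0, 1, 2, 3)]
suspect_result = [suspect.allow(t, suspicion=0.9) for t in (0, 1, 2, 3)]
print(trusted_result)  # [True, True, True, True]
print(suspect_result)  # [True, True, False, False]
```

Both clients send one request per second. The trusted one is never throttled, while the suspicious one uses up its initial burst and is then slowed to a tenth of the base rate.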

Robots.txt Review and Update: The robots.txt file specifies how search engines and other bots should crawl your website. Review it from time to time and update it in line with your anti-scraping strategy.
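
When reviewing a robots.txt file, it can help to test what the rules actually permit. The sketch below uses Python’s standard `urllib.robotparser` module against a made-up file; the paths and the `ExampleScraperBot` name are assumptions for illustration. Keep in mind that robots.txt is advisory: well-behaved crawlers follow it, while malicious scrapers can simply ignore it.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /search
Crawl-delay: 10

User-agent: ExampleScraperBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A generic crawler may fetch product pages but not account pages.
print(parser.can_fetch("SomeCrawler", "https://example.com/products/1"))        # True
print(parser.can_fetch("SomeCrawler", "https://example.com/account/settings"))  # False

# The named bot is disallowed everywhere.
print(parser.can_fetch("ExampleScraperBot", "https://example.com/products/1"))  # False

# Requested delay, in seconds, between requests for generic crawlers.
print(parser.crawl_delay("SomeCrawler"))  # 10
```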

Consider Content Cloaking: In some cases, serving different content to bots than to human users can be effective. This might involve displaying dummy data or redirecting bots to a different page.

Understand the Legal Options: Know the legal aspects of web scraping and the legal action you can take against malicious scrapers. Cease and desist letters have worked in a number of instances.

Utilise Anti-Scraping Services: Consider specialised anti-scraping services or tools that offer advanced protection and automated mitigation. These services can provide a comprehensive solution for complex scraping scenarios.

Prioritise User Experience: While deploying anti-scraping measures, make sure they don’t hurt the experience of genuine users. Balance security with usability to avoid frustrating genuine visitors.

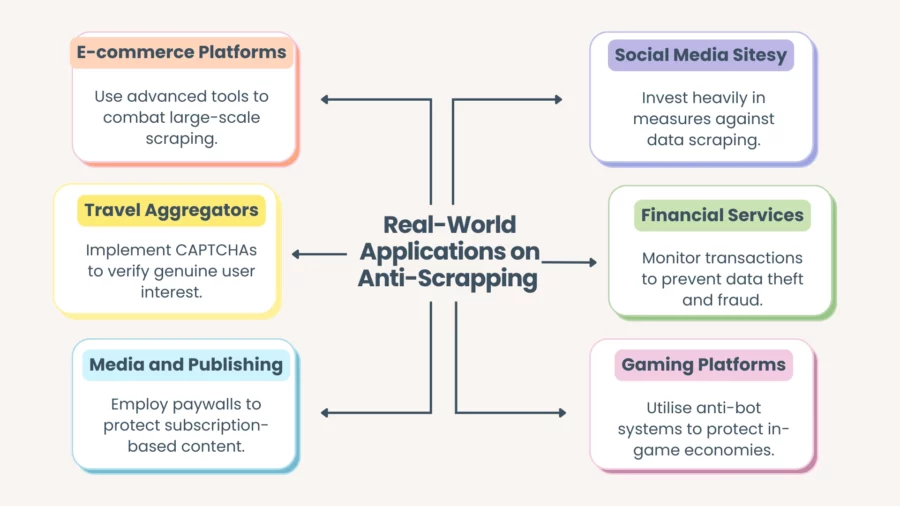

Real-World Applications and Case Studies

E-commerce Platforms

Major online marketplaces, such as Amazon or eBay, must guard against large-scale scraping that reveals price trends or inventory levels in near real-time. They typically rely on advanced bot detection software, device fingerprinting, and aggressive rate limiting.

Travel Aggregators

Airline pricing data is a prime target for scrapers, as it underpins the entire flight aggregation industry.

Airlines and travel agents use CAPTCHAs and hidden form fields to verify genuine user interest. Meanwhile, legitimate travel aggregators often sign data-sharing agreements to avoid being blocked.

Media and Publishing

Online newspapers and content platforms often guard paywalled articles. Scraping these to circumvent subscription fees is a frequent concern.

Publishers might use dynamic token systems, paywall gating, and sophisticated cookie validations to ensure that only paying subscribers have full access.

Social Media Sites

Social media platforms like Facebook, Twitter (now X), and LinkedIn are popular scraping targets for data brokers or spammers seeking user profiles, connections, and demographic information.

These companies invest heavily in anti-scraping protections, often leading to well-publicised legal battles against large-scale scrapers.

Future of Anti-Scraping Protection

AI-Powered Defense

Anti-scraping solutions increasingly use machine learning and AI to detect subtle patterns in bot activity, while scrapers adopt AI-driven techniques to appear more human.

Anomaly detection is likely to become continuous, flagging malicious activity with few false positives.

Integration with Zero Trust Architectures

The “Zero Trust” security model, initially designed for corporate networks, is finding its place in web applications.

Every request should be validated, with continuous authentication processes to ensure valid sessions only.

Further integration of zero-trust principles is likely to make it much harder for scrapers to hold sessions stealthily over long periods.

Evolving Legal Frameworks

The state of the laws regarding data ownership, fair use, and user privacy is in constant flux.

Open data versus proprietary content is actively debated, and the outcome will continue to shape how aggressively companies can employ anti-scraping measures and whether the courts expand or limit those measures.

The next decade could bring regulations that define how websites should protect their data and how data aggregators are allowed to scrape or reuse content.

Growth of Ethical and Transparent Alternatives

As more websites crack down on scraping, legitimate data-seekers will opt for licensed data partnerships or public data endpoints, which reduce friction on both sides.

Such partnerships have the potential to create a more collaborative ecosystem where the site owners make money or attain visibility, and the data consumers access needed information in a reliable and sanctioned manner.

What’s Next?

Anti-scraping protection is essential in the digital marketplace to safeguard content from malicious users aiming to extract data for fraudulent or competitive purposes.

Robust anti-bot measures, such as placing a reverse proxy in front of the application, help distribute traffic and stop scrapers from overloading a single server within a short period of time.

Rate limiting controls the number of requests, distinguishing real human interactions from automated scraping attempts. Honeypot traps are effective in identifying and blocking fake user accounts by enticing bots into revealing their presence.

Advanced anti-scraping software integrates seamlessly with social platforms, providing comprehensive defenses against unauthorised data extraction. These protection measures ensure that valuable digital assets remain secure from malicious purposes.

To explore how Bytescare can safeguard your digital content and provide peace of mind, book a demo today. With its wide range of features, Bytescare is committed to protecting your valuable assets in an increasingly digital world.

The Most Widely Used Brand Protection Software

Find, track, and remove counterfeit listings and sellers with Bytescare Brand Protection software

FAQs

How does anti-scraping protection work?

Anti-scraping protection employs various techniques, such as rate limiting, IP blocking, and CAPTCHA challenges, to detect and thwart automated scraping attempts by crawlers. These mechanisms analyse user behavior and traffic patterns to differentiate between legitimate users and bots.

Why is anti-scraping protection important for websites and applications?

Anti-scraping protection is crucial for websites and applications as it helps safeguard sensitive data and intellectual property from unauthorised access and exploitation by threat actors. This protection ensures the integrity of applications and maintains user trust.

What types of components are commonly used for anti-scraping protection?

Common components of anti-scraping protection include software algorithms, firewalls, and machine learning models that form the backbone of anti-scraping and anti-bot systems. These components work together to create a robust defense against scraping attempts.

Are there any limitations or drawbacks to using anti-scraping protection?

While anti-scraping protection techniques are effective, they can sometimes lead to false positives, blocking legitimate users. Additionally, sophisticated threat actors may find ways to bypass these measures, necessitating continuous updates and improvements to the anti-scraping mechanisms.

How can I maintain the effectiveness of anti-scraping protection on my website?

To maintain the effectiveness of anti-scraping protection, regularly update your anti-scraping systems, monitor traffic for unusual patterns, and adjust your protection techniques as scrapers’ tactics evolve.

Are there specific applications or industries that commonly use anti-scraping protection?

Yes, industries such as e-commerce, finance, and content publishing frequently implement anti-scraping protection to defend against data theft, price scraping, and content duplication, ensuring the security of their digital assets.

How do I maintain or repair anti-scraping protection once it stops working?

To maintain or repair anti-scraping protection that is no longer effective, conduct a thorough assessment of the anti-scraping countermeasures in place, identify vulnerabilities, and apply necessary updates or patches. Regularly testing the system against new scraping techniques can also help ensure ongoing effectiveness.

Ready to Secure Your Online Presence?

You are in the right place. Contact us to learn more.